3D Scene Reconstruction from a Single Monocular Photo by Estimating the Orientation of Rectangles

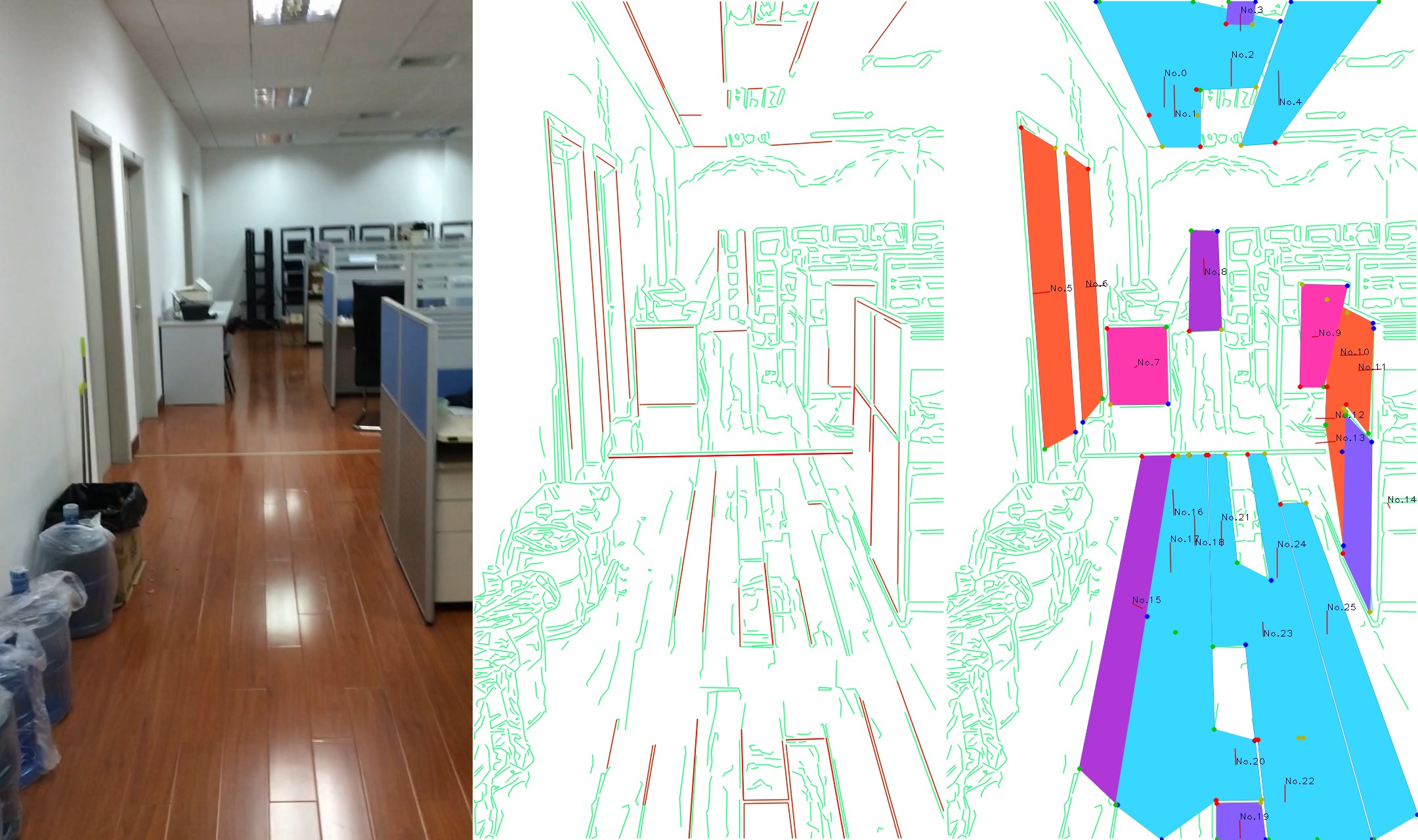

Demo

(No. 15 shows an error caused by the low resolution of the photo.)

Description

This is part of my undergraduate thesis, “Scene Understanding for Robots”, which is still under construction, so this post may seem unfinished. I’m sorry about that, but you are always welcome to contact me[AT]qzane.com for details if you are interested.

Original Photo (Perspective projection)

Finding Strong Outlines and Potential Rectangles (Perspective projection)

This is a critical part of the task. I’m currently using some empirical methods to find potential spatial rectangles, such as checking the completeness of the angles formed between lines. However, the results are not very good, and I am trying some new models with the help of the NYU Depth Dataset V2 and the Stanford 2D-3D-Semantics Dataset.
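The post does not spell out the angle-completeness heuristic, so the following is only a hypothetical sketch of one ingredient such a method might use: testing whether four detected line segments (in pixel coordinates) close up into a quadrilateral, i.e. whether every segment's end meets the next segment's start within a tolerance `tol`. The function name `is_closed_quad` and the 5-pixel default are our own assumptions; a real detector would also check the corner angles and reject degenerate shapes.

```python
import math
from itertools import permutations, product


def _dist(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def is_closed_quad(segs, tol=5.0):
    """Hypothetical check: can four segments ((x1, y1), (x2, y2)) be chained
    into a closed loop whose four corners all close within `tol` pixels?"""
    if len(segs) != 4:
        raise ValueError("expected exactly four segments")
    first, *rest = segs
    # Keep the first segment fixed, try every order of the other three
    # and every direction (start/end swapped or not) for each segment.
    for order in permutations(rest):
        for flips in product((False, True), repeat=4):
            loop = [s[::-1] if flip else s
                    for s, flip in zip((first, *order), flips)]
            # Corner i joins the end of segment i to the start of segment i + 1.
            if all(_dist(loop[i][1], loop[(i + 1) % 4][0]) <= tol
                   for i in range(4)):
                return True
    return False
```

For example, the four sides of a 10 × 10 square pass the test regardless of the order or direction in which the line detector reports them, while a set in which one side stops 7 pixels short of its corner does not.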

Questions and suggestions by email are always welcome!

Surface Orientation Estimation (Perspective projection)

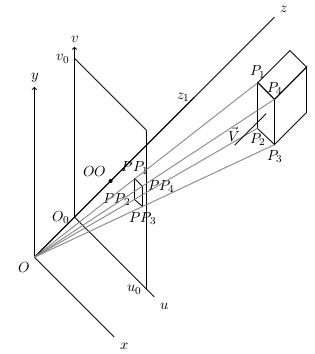

The detailed reasoning process can be downloaded here. The following is an outline.

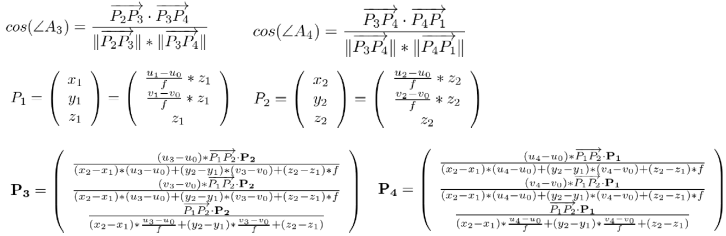

As shown in the picture above, the figure labels the optical center of the camera and its optical axis, a 3D orthogonal coordinate system, and the image plane with its image center.

We are going to estimate the orientation of the rectangle, or more specifically its normal vector, together with its projection on the image plane.
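To make the geometry concrete, here is a minimal pinhole-camera sketch under our own assumptions (not the notation of the original figure): the optical center is at the origin, the optical axis is the +z axis, the image plane is z = f, and image coordinates are measured from the image center. The hypothetical helper `rectangle_corners` builds a synthetic 3D rectangle with a chosen normal so its perspective projection can be inspected.

```python
import numpy as np


def project(points_3d, f):
    """Pinhole projection of Nx3 camera-frame points onto the image plane z = f.

    Assumed convention: optical center at the origin, optical axis = +z,
    image coordinates measured from the image center.
    """
    pts = np.asarray(points_3d, dtype=float)
    return f * pts[:, :2] / pts[:, 2:3]


def rectangle_corners(center, normal, width, height):
    """Hypothetical helper: the four corners, in order around the boundary,
    of a 3D rectangle with the given center and normal."""
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    # Any vector not parallel to n yields an in-plane orthonormal basis (u, v).
    helper = np.array([1.0, 0.0, 0.0]) if abs(n[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    u = np.cross(n, helper)
    u = u / np.linalg.norm(u)
    v = np.cross(n, u)
    c = np.asarray(center, dtype=float)
    hw, hh = width / 2.0, height / 2.0
    return np.array([c - hw * u - hh * v, c + hw * u - hh * v,
                     c + hw * u + hh * v, c - hw * u + hh * v])


# A 2 x 2 square facing the camera at depth 5, seen with f = 1.
corners = rectangle_corners(center=(0, 0, 5), normal=(0, 0, 1), width=2, height=2)
image_points = project(corners, f=1.0)
```

Here `image_points` holds the corners (±0.2, ±0.2): a square facing the camera stays a square, while tilting `normal` turns its image into a general quadrilateral, which is the kind of input the orientation estimate starts from.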

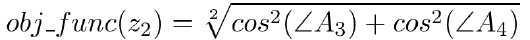

The problem can be reduced to finding the value of the unknown variable that minimizes our objective function, where one quantity is fixed to a constant (any other constant leads to the same estimated orientation).
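The objective function itself did not survive in this copy of the post, so the sketch below does not reproduce it. It only illustrates, under our own assumptions, why fixing a quantity to an arbitrary constant cannot change the estimated orientation: a single photo determines the scene only up to scale. Each image corner is back-projected along its ray r_i = (x_i, y_i, f), the first corner's scale factor is fixed to a constant `lambda1`, and the other three follow from requiring the 3D corners to form a parallelogram (P1 + P3 = P2 + P4). This parallelogram formulation and the names `back_project` and `normal_from_quad` are ours, not the author's.

```python
import numpy as np


def back_project(quad, f, lambda1=1.0):
    """3D corners P_i = lambda_i * (x_i, y_i, f) of an assumed parallelogram.

    quad: four image points (x, y), in order around the shape and measured
    from the image center; lambda1 is the arbitrary constant fixing the scale.
    """
    q = np.asarray(quad, dtype=float)
    rays = np.column_stack([q, np.full(4, float(f))])
    # P1 + P3 = P2 + P4  =>  l2*r2 - l3*r3 + l4*r4 = lambda1*r1 (3 equations, 3 unknowns)
    A = np.column_stack([rays[1], -rays[2], rays[3]])
    l2, l3, l4 = np.linalg.solve(A, lambda1 * rays[0])
    scales = np.array([lambda1, l2, l3, l4])
    return scales[:, None] * rays


def normal_from_quad(quad, f, lambda1=1.0):
    """Unit normal of the back-projected parallelogram."""
    P = back_project(quad, f, lambda1)
    n = np.cross(P[1] - P[0], P[3] - P[0])
    return n / np.linalg.norm(n)
```

Calling `normal_from_quad(quad, f, 1.0)` and `normal_from_quad(quad, f, 7.5)` returns the same vector: every corner is scaled by the same factor, and the normalization removes it. Note that this sketch uses only the parallelogram property and ignores the right angles of a rectangle.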

Since our objective function has only one variable, there are many methods for finding its minimum. I tried particle swarm optimization (PSO), and it worked well.
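For readers unfamiliar with PSO, here is a minimal one-dimensional sketch of the standard global-best variant. The parameter values (inertia `w`, acceleration coefficients `c1` and `c2`, swarm size, iteration count) are common textbook choices that we picked, not settings from the post, and `func` stands in for the objective function, which is not reproduced here.

```python
import random


def pso_minimize_1d(func, lower, upper, n_particles=30, n_iters=100,
                    w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize a scalar function of one variable on [lower, upper] with
    global-best particle swarm optimization. Returns (best_x, best_value)."""
    rng = random.Random(seed)
    pos = [rng.uniform(lower, upper) for _ in range(n_particles)]
    vel = [0.0] * n_particles
    best_pos = pos[:]                          # each particle's best position so far
    best_val = [func(x) for x in pos]
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g], best_val[g]    # swarm-wide best
    for _ in range(n_iters):
        for i in range(n_particles):
            r1, r2 = rng.random(), rng.random()
            # Inertia + pull toward the personal best + pull toward the global best.
            vel[i] = (w * vel[i]
                      + c1 * r1 * (best_pos[i] - pos[i])
                      + c2 * r2 * (g_pos - pos[i]))
            pos[i] = min(max(pos[i] + vel[i], lower), upper)  # stay inside the interval
            val = func(pos[i])
            if val < best_val[i]:
                best_pos[i], best_val[i] = pos[i], val
                if val < g_val:
                    g_pos, g_val = pos[i], val
    return g_pos, g_val


x_best, f_best = pso_minimize_1d(lambda x: (x - 2.0) ** 2, -10.0, 10.0)
```

With only one variable, a golden-section search or a dense grid over the interval would also work; PSO's population of particles makes it less prone than a purely local search to stalling in a local minimum.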

Once we have this value, we obtain the intermediate quantity that depends on it, from which the normal vector can be calculated easily.
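As a cross-check on the final step, the normal of a rectangle can also be computed in closed form from the same image quadrilateral using vanishing points. This is a different, standard technique, not the procedure described above. Each pair of opposite sides meets at a vanishing point v = (v_x, v_y, v_w) in homogeneous image coordinates. Under our pinhole assumptions (image center at the origin, focal length f), the 3D direction of those sides is proportional to (v_x, v_y, f·v_w), and the normal is the cross product of the two edge directions. The function names `edge_direction` and `normal_from_vanishing_points` are hypothetical.

```python
import numpy as np


def _homog(p):
    """Homogeneous coordinates (x, y, 1) of an image point."""
    return np.array([p[0], p[1], 1.0])


def edge_direction(p_a, p_b, p_c, p_d, f):
    """3D direction shared by image segments p_a-p_b and p_d-p_c,
    assuming they are images of parallel 3D edges."""
    line1 = np.cross(_homog(p_a), _homog(p_b))
    line2 = np.cross(_homog(p_d), _homog(p_c))
    v = np.cross(line1, line2)  # vanishing point; v[2] == 0 if the sides are parallel in the image
    return np.array([v[0], v[1], f * v[2]])


def normal_from_vanishing_points(quad, f):
    """Unit normal (up to sign) of the rectangle imaged as `quad`."""
    q = [np.asarray(p, dtype=float) for p in quad]
    d1 = edge_direction(q[0], q[1], q[2], q[3], f)  # sides 1-2 and 4-3
    d2 = edge_direction(q[1], q[2], q[3], q[0], f)  # sides 2-3 and 1-4
    n = np.cross(d1, d2)
    return n / np.linalg.norm(n)
```

The sign of the result is arbitrary, so compare normals by the absolute value of their dot product.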

3D Scene Reconstruction (Orthographic projection)

Once we have the orientations of all rectangles, we can infer their relative positions from their intersections in the photo and eventually reconstruct the whole scene. Although the low resolution of the photo and some potential distortion may cause errors, they won’t have a large impact on the whole model, because the orientations are calculated independently.
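One geometric fact helps when combining independently estimated orientations: two non-parallel planes meet in a line whose 3D direction is the cross product of their normals, and the angle between the normals follows from their dot product. The sketch below computes both, for instance to compare a reconstructed wall and floor against the seam visible in the photo. It is our illustration, not the author's reconstruction code, and the function names are hypothetical.

```python
import numpy as np


def intersection_direction(n1, n2, eps=1e-9):
    """Unit direction of the line where planes with normals n1 and n2 meet."""
    a = np.asarray(n1, dtype=float)
    b = np.asarray(n2, dtype=float)
    d = np.cross(a / np.linalg.norm(a), b / np.linalg.norm(b))
    length = np.linalg.norm(d)  # equals sin(angle between the normals)
    if length < eps:
        raise ValueError("normals are parallel: the planes do not meet in a unique line")
    return d / length


def angle_between_normals(n1, n2):
    """Angle in degrees between two plane normals."""
    a = np.asarray(n1, dtype=float)
    b = np.asarray(n2, dtype=float)
    cos_angle = (a @ b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


floor_normal, wall_normal = (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
seam = intersection_direction(floor_normal, wall_normal)
```

Here `seam` is the x axis and the normals are 90 degrees apart. Under an orthographic projection along the z axis (our assumption for this step), a 3D line with direction d appears in the image with direction (d_x, d_y), which can be compared with the intersection observed in the photo.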

Datasets

I’m currently trying to do some qualitative analysis using NYU Depth Dataset V2 and Stanford 2D-3D-Semantics Dataset.